25 years of accuracy-first epistemology - Part 2

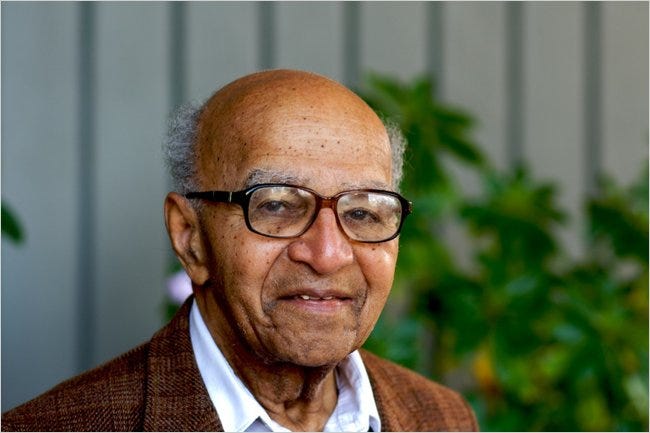

Graham Oddie on experimentation and responding to evidence

I trust that, among your new year resolutions, you committed to forming more accurate credences, as measured by the sort of strictly proper scoring rule that I introduced at the end of the previous post in this series. In that endeavour, I wish you good luck!

In this post, I’m going to look at the second of the two papers that really launched accuracy-first epistemology: the breezy and alliterative nine-page ‘Conditionalization, Cogency, and Cognitive Value’, by Graham Oddie, published in The British Journal for the Philosophy of Science. As I mentioned in the previous post, this paper was published in 1997, and so preceded Jim Joyce’s paper on Probabilism by a year. What’s more, it appeals to the property of strict propriety; and, as I said in the previous post, this is the property of scoring rules that is most widely assumed in the accuracy-first literature today. But Joyce’s paper has been more influential, and the norm for which it argues is in some sense more basic than the norms for which Oddie argues. What’s more, while Oddie’s argument in favour of experimenting is complete, the argument for Conditionalization, at which he gestures at the end of the paper, is not quite complete. It was completed by Hilary Greaves and David Wallace nine years later in 2006, in their ‘Justifying Conditionalization’, another of the foundational papers of the topic, which we’ll meet again below.

Oddie’s paper begins by noting that one could satisfy the two central norms of Bayesian epistemology, Probabilism and Conditionalization, without ever gathering any new evidence and without ever changing one’s credences. Probabilism tells you how your credences in different propositions should relate to one another at a given time, while Conditionalization tells you how your credences should change over time, depending on the evidence you gather. But neither actually requires you to gather any evidence. And, if you don’t, Conditionalization just tells you to stick with the credences you have. But surely we are rationally required to gather evidence? Surely a life spent obtaining no new information and never changing our credences is not a fully rational life? Surely it is suboptimal in some way? It is this thought that Oddie seeks to vindicate.

He begins by noting that there is a vindication of the thought available. Alongside Probabilism and Conditionalization, which tell you how your credences should behave, Bayesians typically also accept a decision rule, namely, Maximize Expected Utility, which says that, when faced with a decision under uncertainty, you should choose an option with maximal expected utility by the lights of the credences you have and your utilities. And, Oddie notes, the work of the statistician David Blackwell and the mathematician I. J. Good has furnished us with the resources to argue from that decision rule, together with Probabilism and Conditionalization, to the conclusion that we very often are rationally required to gather evidence.

He is referring to Good’s Value of Information Theorem. The idea is this: Suppose you’ll face a decision a little down the line; perhaps you’ll have to decide whether to take an umbrella or not when you leave for work. And suppose that, between now and then, you can obtain some information; perhaps you can look at the Met Office app on your phone and see what it predicts. You know that, when you face the decision a little down the line, you’ll choose by appealing to the credences you have at that time. Indeed, you’ll choose an option that maximizes expected utility by the lights of those credences, just as Maximize Expected Utility tells you to do. You also know that, if you don’t look at the Met Office app, your credences at that later time will be the same as the ones you have now: you’ll have gained no new evidence, so they’ll stay the same. But if you do look at the app, your credences might well change: you might get evidence that makes you more confident in certain things and less confident in others. So, when you choose whether or not to obtain the new information, you’re really deciding with which credences you’ll face the decision later. So, we might say that, relative to a decision you’ve got to make, the pragmatic value, at a given state of the world, of having some credences is the utility, at that state of the world, of whichever option you’ll pick if you choose using those credences.

So now we have a way of assessing the pragmatic value of gathering the information available and the pragmatic value of not gathering it. The pragmatic value, at a state of the world, of gathering the information is the utility of the option you’ll pick at that state of the world if you choose using the credences you will have if you learn whatever information is true at that world and update your prior credences on it. And the pragmatic value, at a state of the world, of not gathering the information is the utility, at that state of the world, of the option you’ll pick if you choose using the credences you currently have.

Now, what Good showed was that, from the point of view of your prior credences, the expected pragmatic value of gathering the information is never less than the expected pragmatic value of not gathering the information, and very often it’s strictly greater. How often? Whenever you give at least some positive credence to the possibility that, if you gather information, you’ll learn something that will make you choose differently from how you’d choose using your prior.
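To make Good’s result concrete, here is a minimal sketch of the umbrella case in Python. All the numbers (the prior, the reliability of the app, the utilities) are made up for illustration, and the names `PRIOR`, `LIKELIHOOD`, `UTILITY` and the helper functions are my own labels rather than anything in Good’s paper.

```python
from fractions import Fraction as F

# Hypothetical numbers, chosen only for illustration.
PRIOR = {"rain": F(3, 10), "dry": F(7, 10)}
# LIKELIHOOD[state][forecast]: chance the app shows each forecast in each state.
LIKELIHOOD = {
    "rain": {"wet": F(9, 10), "fine": F(1, 10)},
    "dry": {"wet": F(2, 10), "fine": F(8, 10)},
}
UTILITY = {
    "umbrella": {"rain": 5, "dry": 8},
    "no umbrella": {"rain": 0, "dry": 10},
}


def expected_utility(option, credence):
    return sum(credence[s] * UTILITY[option][s] for s in credence)


def choice(credence):
    """The option that maximizes expected utility by the lights of `credence`."""
    return max(UTILITY, key=lambda option: expected_utility(option, credence))


def conditionalize(prior, forecast):
    p_forecast = sum(prior[s] * LIKELIHOOD[s][forecast] for s in prior)
    return {s: prior[s] * LIKELIHOOD[s][forecast] / p_forecast for s in prior}


def value_of_not_looking(prior):
    # You choose with your prior, whatever the state turns out to be.
    option = choice(prior)
    return sum(prior[s] * UTILITY[option][s] for s in prior)


def value_of_looking(prior):
    # In each state, you choose with the posterior you get from the forecast you see.
    total = F(0)
    for s in prior:
        for forecast, chance in LIKELIHOOD[s].items():
            posterior = conditionalize(prior, forecast)
            total += prior[s] * chance * UTILITY[choice(posterior)][s]
    return total


print(value_of_not_looking(PRIOR))  # 71/10
print(value_of_looking(PRIOR))      # 807/100
```

With these numbers, the prior recommends taking the umbrella, but a ‘fine’ forecast would lead you to leave it at home. Since you give positive credence to seeing that forecast, looking at the app has strictly greater expected pragmatic value (8.07 against 7.1).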

Now, as Oddie notes, this only tells you that you’re rationally required to gather information if it is relevant to future decisions you will face. If it’s never relevant to any future decision you’ll face, learning it will never change how you’ll choose and so it will never increase the expected pragmatic value of your credences. So, he seeks a way of arguing that you should gather information even when it doesn’t have practical import. And his strategy is to replace the notion of the pragmatic value of credences with the notion of their cognitive or epistemic value. He doesn’t talk about accuracy, as Joyce does, but accuracy would be one possible account of the cognitive or epistemic value of credences—though of course it’s not the only account, and indeed Oddie later argued that Joyce’s account of cognitive value is incomplete.

The final piece of Oddie’s argument, which needs to be in place before we can state the central result of his paper, is his justification for focusing on strictly proper scoring rules as measures of cognitive or epistemic value. I think his argument doesn’t work, but it’s intriguing, and it forms the basis for an argument that Joyce himself later favoured when, in 2009, he started focusing on strictly proper scoring rules as measures of epistemic value, rather than the accuracy measures he described in his 1998 paper.

Oddie’s argument begins with the following principle:

Conservatism: Absent any new information, you should not change your cognitive state.

By cognitive state, he means your credences. Then he argues as follows: Suppose our measure of cognitive value were not strictly proper. Then there would be some probabilistic credence function that expects some alternative credence function to be better than it expects itself to be. But then, if you were to have the first credence function, you would be required to move away from it, perhaps to the alternative or to some other credence function you expect to do better than yourself, even though you had not gathered any new information. And this is contrary to what Conservatism requires.
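To see what a failure of strict propriety looks like, here is a small sketch that compares two candidate measures of cognitive value for a credence in a single proposition. The two measures (a quadratic one and an absolute-value one) and the credence of 0.7 are my choices for illustration; Oddie’s argument is stated in general terms. For simplicity, the search runs over a grid of credences in steps of 0.01.

```python
from fractions import Fraction as F


def quadratic(credence, truth):
    """Negative squared distance from the truth value (1 if true, 0 if false)."""
    return -(truth - credence) ** 2


def absolute(credence, truth):
    """Negative absolute distance from the truth value."""
    return -abs(truth - credence)


def expected_value(measure, p, credence):
    """The value that someone with credence p expects `credence` to have."""
    return p * measure(credence, 1) + (1 - p) * measure(credence, 0)


GRID = [F(i, 100) for i in range(101)]


def most_valued_credence(measure, p):
    """The credence on the grid that credence p expects to do best."""
    return max(GRID, key=lambda c: expected_value(measure, p, c))


p = F(7, 10)
print(most_valued_credence(quadratic, p))  # 7/10
print(most_valued_credence(absolute, p))   # 1
```

On the quadratic measure, a credence of 0.7 expects itself to do best. On the absolute-value measure, it expects a credence of 1 to do better, so someone using that measure would feel pressure to move from 0.7 to 1 without learning anything new.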

The problem with this argument is that Conservatism isn’t true as stated. The true principle in the vicinity is this:

Conservatism*: Absent any new information, and providing your cognitive state is rationally permissible, you should not change your cognitive state.

For it is perfectly reasonable to change your cognitive state absent new information if that cognitive state is irrational; and it is perfectly reasonable to use a measure of cognitive value that demands you do so. To get strict propriety from Conservatism*, you need to assume that all probabilistic credence functions are rationally permissible. And of course that is contested. When Joyce resurrected this argument in 2009, his contribution was precisely to include an argument that all probabilistic credence functions are rationally permissible.

In any case, I’ll leave this because I’ll be coming back to arguments for strict propriety in later posts. Let’s just grant it here. Then Oddie’s central result is this: if you measure cognitive value using a strictly proper scoring rule, then learning new evidence will never decrease the cognitive value of your credences in expectation, and very often it will increase it in expectation. How often? Whenever you assign positive credence to the possibility you’ll learn evidence that will lead you to change any of your credences, even if only very slightly. So, essentially, as long as it’s possible you’ll learn something of which you weren’t already certain, learning improves your cognitive state in expectation.

Let’s unpack this: when I was presenting Good’s Value of Information Theorem, I said that, relative to a decision problem, the pragmatic value, at a given state of the world, of some credences is the utility, at that state of the world, of whichever option in the decision problem maximizes expected utility from the point of view of those credences. And then the pragmatic value, at a state of the world, of gathering information, is the pragmatic value, at that state, of the posterior credences you’ll have after you learn whatever is true at that world and then update on that new information by conditionalizing on it, as the Bayesian tells you to do. While the pragmatic value, at a state of the world, of not gathering information is the pragmatic value at that state of your prior credences, which will also be your posteriors, since you don’t gather any evidence. Similarly, then, we say that the cognitive value, at a given state of the world, of gathering information, is the cognitive value at that state of the posterior credences you’ll have after you conditionalize on whatever evidence you learn at that world. While the cognitive value, at a state of the world, of not gathering information is the cognitive value at that state of your prior credences, which will also be your posteriors, since you don’t gather any new evidence. With this defined, we can then define the expected cognitive value of gathering information and the expected cognitive value of not gathering information. Oddie’s central result is this: the former is always at least as great as the latter, and strictly greater when your priors give positive credence to the possibility of learning evidence that will change your credences.
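Here is a rough numerical illustration of Oddie’s result, using the negative Brier score as the strictly proper measure of cognitive value. The weather model, the numbers, and all the names in the code are hypothetical; the sketch checks one example and is no substitute for the proof.

```python
from fractions import Fraction as F

# Hypothetical prior and forecast reliabilities, chosen only for illustration.
PRIOR = {"rain": F(3, 10), "dry": F(7, 10)}
INFORMATIVE = {
    "rain": {"wet": F(9, 10), "fine": F(1, 10)},
    "dry": {"wet": F(2, 10), "fine": F(8, 10)},
}
# A forecast that is equally likely either way in both states teaches you nothing.
UNINFORMATIVE = {
    "rain": {"wet": F(1, 2), "fine": F(1, 2)},
    "dry": {"wet": F(1, 2), "fine": F(1, 2)},
}


def cognitive_value(credence, true_state):
    """Negative Brier score: 0 is best, lower is worse."""
    return -sum((int(s == true_state) - credence[s]) ** 2 for s in credence)


def conditionalize(prior, likelihood, forecast):
    p_forecast = sum(prior[s] * likelihood[s][forecast] for s in prior)
    return {s: prior[s] * likelihood[s][forecast] / p_forecast for s in prior}


def value_of_not_gathering(prior):
    # Your posterior is just your prior, whatever the state.
    return sum(prior[s] * cognitive_value(prior, s) for s in prior)


def value_of_gathering(prior, likelihood):
    # In each state, score the posterior you reach from the forecast you see.
    total = F(0)
    for s in prior:
        for forecast, chance in likelihood[s].items():
            posterior = conditionalize(prior, likelihood, forecast)
            total += prior[s] * chance * cognitive_value(posterior, s)
    return total


print(float(value_of_not_gathering(PRIOR)))
print(float(value_of_gathering(PRIOR, INFORMATIVE)))
print(float(value_of_gathering(PRIOR, UNINFORMATIVE)))
```

With the informative forecast, gathering has higher expected cognitive value than not gathering (roughly -0.24 against -0.42). With the uninformative one, the two are exactly equal, since no possible forecast would change your credences.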

That’s a really neat result in itself, and it’s since been discussed and expanded by Wayne Myrvold and Kevin Dorst and his co-authors. But Oddie tops it off with an intriguing suggestion in the final section. In the argument so far, we assume that you update by conditionalizing on new evidence, and show that, if that’s what you’ll do with it, getting new evidence is nearly always beneficial epistemically speaking, and never detrimental. But, Oddie asks, can we perhaps reverse the argument? That is, instead of assuming we’ll update by conditionalizing and showing that gaining evidence is desirable, we assume that gaining evidence is desirable and show that this is only true if we’ll respond to that evidence by conditionalizing on it. But alas this isn’t true. There are other ways to respond to evidence that will make it desirable to gather evidence. That’s not too surprising: you just update by something very close to conditionalizing, and then gathering evidence and responding to it in this way gets you enough of the benefits of gathering it and conditionalizing that it surpasses not gathering.

However, it turns out that an argument for conditionalizing really does lie close by. The proof of the epistemic version of Good’s Value of Information Theorem is naturally adapted into a proof of a more general result: of all the ways of responding to new evidence, the one that maximizes expected cognitive value is conditionalizing, providing you measure cognitive value using a strictly proper scoring rule. That is, of all the ways you might respond to new evidence you acquire, conditionalizing is the one that has greatest expected cognitive value from the point of view of your priors. That was the conclusion of Hilary Greaves and David Wallace’s paper, ‘Justifying Conditionalization’. And there is an interesting parallel with the development of Good’s Value of Information Theorem on the pragmatic side, for in 1976, Peter M. Brown adapted Good’s proof to show that conditionalization is the method of updating on new evidence that maximizes expected pragmatic utility from the point of view of your prior. So Good : Brown :: Oddie : Greaves & Wallace.
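The Greaves and Wallace result can be illustrated, though of course not proved, by pitting conditionalizing against a few rival ways of responding to evidence. In the sketch below, the weather model, the three rival rules (`stay_put`, `jump_to_certainty`, `halfway`) and the choice of the negative Brier score are all my own assumptions for the sake of the example.

```python
from fractions import Fraction as F

# Hypothetical prior and forecast reliabilities, chosen only for illustration.
PRIOR = {"rain": F(3, 10), "dry": F(7, 10)}
LIKELIHOOD = {
    "rain": {"wet": F(9, 10), "fine": F(1, 10)},
    "dry": {"wet": F(2, 10), "fine": F(8, 10)},
}


def cognitive_value(credence, true_state):
    """Negative Brier score: 0 is best, lower is worse."""
    return -sum((int(s == true_state) - credence[s]) ** 2 for s in credence)


# An updating rule takes the forecast you see and returns your new credences.
def conditionalize(forecast):
    p_forecast = sum(PRIOR[s] * LIKELIHOOD[s][forecast] for s in PRIOR)
    return {s: PRIOR[s] * LIKELIHOOD[s][forecast] / p_forecast for s in PRIOR}


def stay_put(forecast):
    """Ignore the evidence and keep the prior."""
    return dict(PRIOR)


def jump_to_certainty(forecast):
    """Become certain of whichever state the forecast makes likelier."""
    likelier = "rain" if forecast == "wet" else "dry"
    return {s: F(int(s == likelier)) for s in PRIOR}


def halfway(forecast):
    """Move only half of the way from the prior to the conditionalized posterior."""
    posterior = conditionalize(forecast)
    return {s: (PRIOR[s] + posterior[s]) / 2 for s in PRIOR}


def expected_value(rule):
    """Expected cognitive value of following `rule`, by the lights of the prior."""
    return sum(
        PRIOR[s] * chance * cognitive_value(rule(forecast), s)
        for s in PRIOR
        for forecast, chance in LIKELIHOOD[s].items()
    )


RIVALS = [stay_put, jump_to_certainty, halfway]
for rule in [conditionalize] + RIVALS:
    print(rule.__name__, float(expected_value(rule)))
```

In this example, conditionalizing comes out ahead of all three rivals. Note that `halfway` still beats `stay_put`, which fits the point made earlier that gathering evidence can be worthwhile even for someone who responds to it in a way that is merely close to conditionalizing.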

As regards priority, what does Good add to Blackwell, who (I think) was first by a couple of decades?